Connectivity v1

Information about secure network communications in a PGD cluster includes:

- Services

- Domain names resolution using fully qualified domain names (FQDN)

- TLS configuration

Notice

Although these topics might seem unrelated to each other, they all participate in the configuration of the PGD resources to make them universally identifiable and accessible over a secure network.

Services

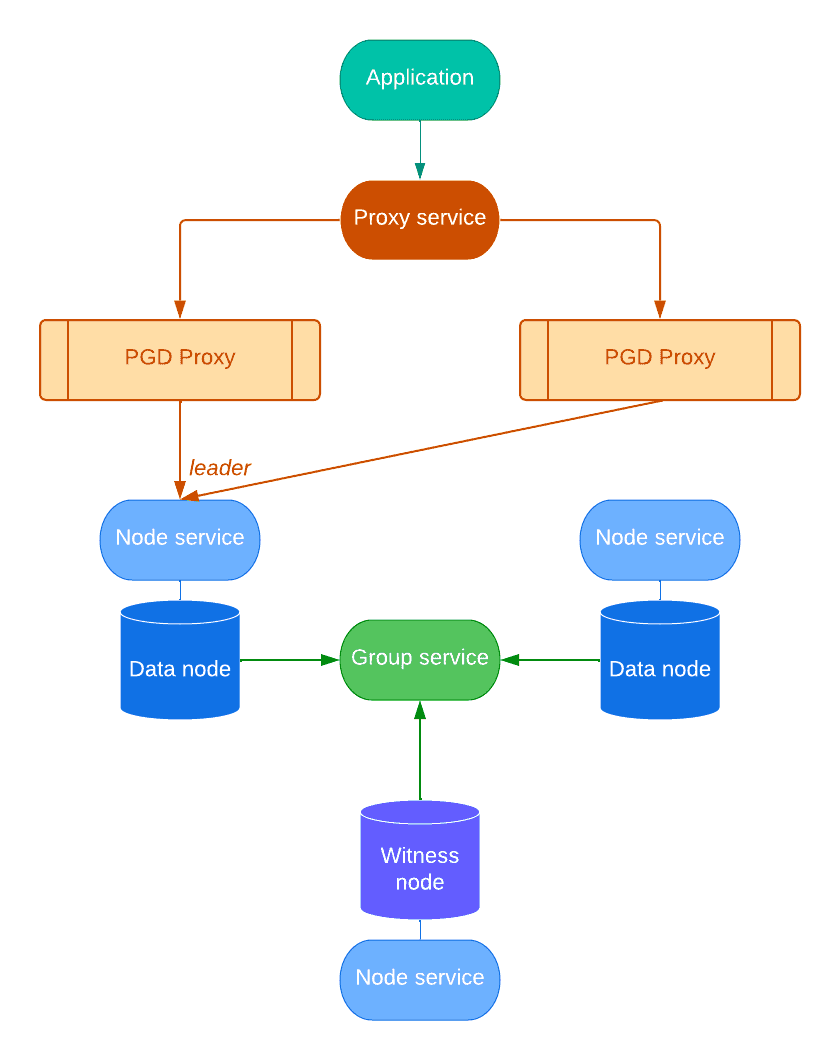

Resources in a PGD cluster are accessible through Kubernetes services. Every PGD group manages several of them, namely:

- One service per node, used for internal communications (node service).

- A group service to reach any node in the group, used primarily by EDB Postgres Distributed for Kubernetes to discover a new group in the cluster.

- A proxy service to enable applications to reach the write leader of the group transparently using PGD Proxy.

- A proxy-r service to enable applications to reach the read nodes of the group transparently using PGD Proxy. This service is disabled by default and controlled by the .spec.proxySettings.enableReadNodeRouting setting.

For an example that uses these services, see Connecting an application to a PGD cluster.

Each service is generated from a customizable template in the .spec.connectivity section of the manifest.

All services must be reachable using their FQDN from all the PGD nodes in all the Kubernetes clusters. See Domain names resolution.

EDB Postgres Distributed for Kubernetes provides a service templating framework that lets you customize services at the following levels:

Node Service Template

: Each PGD node is reachable using a service that can be configured in the .spec.connectivity.nodeServiceTemplate section.

Group Service Template

: Each PGD group has a group service that's a single entry point for the whole group and that can be configured in the .spec.connectivity.groupServiceTemplate section.

Proxy Service Template

: Each PGD group has a proxy service to reach the group write leader through PGD Proxy. It can be configured in the .spec.connectivity.proxyServiceTemplate section. This is the entry-point service for the applications.

Proxy Read Service Template

: Each PGD group has a proxy service to reach the group read nodes through PGD Proxy. It's enabled by .spec.proxySettings.enableReadNodeRouting and can be configured in the .spec.connectivity.proxyReadServiceTemplate section. This is the entry-point service for applications that connect to the read nodes.

You can use templates to create a LoadBalancer service or to add arbitrary annotations and labels to a service to integrate with other components available in the Kubernetes system (for example, to create external DNS names or tweak the generated load balancer).

Domain names resolution

EDB Postgres Distributed for Kubernetes ensures that all resources in a PGD group have an FQDN by adopting a convention that uses the PGD group name as a prefix for all of them.

As a result, it expects you to define the domain name of the PGD group. You can do this through the .spec.connectivity.dns section, which controls how the FQDNs for the resources are generated, using two fields:

- domain: Domain name for all the objects in the PGD group to use (mandatory).

- hostSuffix: Suffix to add to each service in the PGD group (optional).

TLS configuration

EDB Postgres Distributed for Kubernetes requires that resources in a PGD cluster communicate over a secure connection. It relies on PostgreSQL's native support for SSL connections to encrypt client/server communications using TLS protocols for increased security.

Currently, EDB Postgres Distributed for Kubernetes requires that cert-manager is installed. Cert-manager was chosen as the tool to provision dynamic certificates given that it's widely recognized as the standard in a Kubernetes environment.

The .spec.connectivity.tls section describes how the nodes communicate with each other:

- mode is an enumeration describing how the server certificates are verified during PGD group nodes communication. It accepts the following values, as documented in SSL Support in the PostgreSQL documentation:

  - verify-full

  - verify-ca

  - required

- serverCert defines the server certificates used by the PGD group nodes to accept requests. The clients validate this certificate depending on the passed TLS mode. It accepts the same values as mode.

- clientCert defines the streaming_replica user certificate used by the nodes to authenticate each other.

Server TLS configuration

The server certificate configuration is specified in the .spec.connectivity.tls.serverCert.certManager section of the PGDGroup custom resource.

The following assumptions were made for this section to work:

- An issuer .spec.connectivity.tls.serverCert.certManager.issuerRef is available for the domain .spec.connectivity.dns.domain and any other domain used by .spec.connectivity.tls.serverCert.certManager.altDnsNames.

- There's a secret containing the public certificate of the CA used by the issuer .spec.connectivity.tls.serverCert.caCertSecret.

The .spec.connectivity.tls.serverCert.certManager section is used to create a per-node cert-manager certificate request. The resulting certificate is used by the underlying Postgres instance to terminate TLS connections.

The operator adds the following altDnsNames to the certificate:

- $node$hostSuffix.$domain

- $groupName$hostSuffix.$domain
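The naming pattern above can be sketched in shell. This is a minimal illustration, assuming the names are formed exactly as the name, then the host suffix, then a dot and the domain. The helper name pgd_fqdn and the sample values (node-1, -dc1, example.com) are hypothetical and not part of any EDB tooling.

```shell
# Compose an FQDN as <name><hostSuffix>.<domain>.
# pgd_fqdn is a hypothetical helper used only for illustration.
pgd_fqdn() {
  # $1 = node or group name, $2 = hostSuffix (may be empty), $3 = domain
  printf '%s%s.%s\n' "$1" "$2" "$3"
}

# The two names that would be added for node "node-1" in group "my-group",
# assuming hostSuffix "-dc1" and domain "example.com" (sample values).
pgd_fqdn node-1 -dc1 example.com
pgd_fqdn my-group -dc1 example.com
```

Any additional name, such as a load balancer host name, isn't derived this way and must be listed explicitly in altDnsNames.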

Important

It's your responsibility to add to .spec.connectivity.tls.serverCert.certManager.altDnsNames any name required by the underlying networking architecture, for example, the names of load balancers used to reach the nodes.

Client TLS configuration

The operator requires client certificates to be dynamically provisioned using cert-manager (the recommended approach) or pre-provisioned using secrets.

Dynamic provisioning via cert-manager

The client certificates configuration is managed by the .spec.connectivity.tls.clientCert.certManager section of the PGDGroup custom resource.

The following assumptions were made for this section to work:

- An issuer .spec.connectivity.tls.clientCert.certManager.issuerRef is available and signs a certificate with the common name streaming_replica.

- There's a secret containing the public certificate of the CA used by the issuer .spec.connectivity.tls.clientCert.caCertSecret.

The operator uses the configuration under .spec.connectivity.tls.clientCert.certManager to create a certificate request for the streaming_replica Postgres user. The resulting certificate is used to secure communication between the nodes.

Pre-provisioned certificates via secrets

Alternatively, you can specify a secret containing the pre-provisioned client certificate for the streaming replication user through the .spec.connectivity.tls.clientCert.preProvisioned.streamingReplica.secretRef option. In this case, the certificate lifecycle is managed entirely by a third party, either manually or through automation, by updating the content of the secret.

Connecting to a PGD cluster from an application

Connecting to a PGD group from an application running inside the same Kubernetes cluster or from outside the cluster is a simple procedure. In both cases, you connect to the proxy service of the PGD group as the app user. The proxy service is a LoadBalancer service that routes the connection to the write leader or to the read nodes of the PGD group, depending on which proxy service you connect to.

Connecting from inside the cluster

When connecting from inside the cluster, you can use the proxy service name to connect to the PGD group. The proxy service name is composed of the PGD group name and the optional host suffix defined in the .spec.connectivity.dns section of the PGDGroup custom resource.

For example, if the PGD group name is my-group, and the host suffix is .my-domain.com, the proxy service name is my-group.my-domain.com.

Before connecting, you need to get the password for the app user from the app user secret. The naming format of the secret is my-group-app for a PGD group named my-group.

You can get the username and password from the secret using the following commands:

kubectl get secret my-group-app -o jsonpath='{.data.username}' | base64 --decode

kubectl get secret my-group-app -o jsonpath='{.data.password}' | base64 --decode

With this, you have all the pieces for a connection string to the PGD group:

postgresql://<app-user>:<app-password>@<proxy-service-name>:5432/<database>

Or, for a psql invocation:

psql -U <app-user> -h <proxy-service-name> <database>

Where app-user and app-password are the values you got from the secret, and database is the name of the database you want to connect to. (The default is app for the app user.)
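The steps above can be combined into a small shell sketch. It assumes the my-group-app secret and the my-group.my-domain.com proxy service name from the earlier examples. The helper names pgd_secret_value and pgd_conn_uri are hypothetical. The password is inserted as is, so a password containing characters that are reserved in URIs would need percent-encoding first.

```shell
# Hypothetical helpers for illustration; not part of any EDB tooling.

# Read one key (username or password) from a secret.
# Needs kubectl access to the cluster, so it is only defined here, not run.
pgd_secret_value() {
  # $1 = secret name (for example, my-group-app), $2 = key in .data
  kubectl get secret "$1" -o jsonpath="{.data.$2}" | base64 --decode
}

# Build the connection URI from its parts.
pgd_conn_uri() {
  # $1 = user, $2 = password, $3 = proxy service name, $4 = database
  printf 'postgresql://%s:%s@%s:5432/%s\n' "$1" "$2" "$3" "$4"
}

# Usage against a live cluster (commented out because it needs kubectl and psql):
# user=$(pgd_secret_value my-group-app username)
# pass=$(pgd_secret_value my-group-app password)
# psql "$(pgd_conn_uri "$user" "$pass" my-group.my-domain.com app)"
```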

Connecting from outside the Kubernetes cluster

When connecting from outside the Kubernetes cluster, an Ingress resource or a load balancer is generally necessary. Check your cloud provider or local installation for more information about their behavior in your environment.

Ingresses and load balancers require a pod selector to forward connections to the PGD proxies. When configuring them, we suggest using the following labels:

- k8s.pgd.enterprisedb.io/group: Set to the PGD group name.

- k8s.pgd.enterprisedb.io/workloadType: Set to pgd-proxy.
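As a quick check before wiring up an Ingress or load balancer, the two labels above can be combined into a single label selector. The sketch below is illustrative only. The helper name pgd_proxy_selector is hypothetical, and my-group is the sample group name used elsewhere on this page.

```shell
# Build a label selector that matches the PGD proxy pods of one group.
# pgd_proxy_selector is a hypothetical helper used only for illustration.
pgd_proxy_selector() {
  # $1 = PGD group name
  printf 'k8s.pgd.enterprisedb.io/group=%s,k8s.pgd.enterprisedb.io/workloadType=pgd-proxy\n' "$1"
}

# Usage against a live cluster (commented out because it needs kubectl):
# kubectl get pods -l "$(pgd_proxy_selector my-group)"
```

If the commented kubectl command lists the expected proxy pods, the same two labels should be suitable as the pod selector.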

If using Kind or other solutions for local development, the easiest way to access the PGD group from outside is to use port forwarding to the proxy service. You can use the following command to forward port 5432 on your local machine to the proxy service:

kubectl port-forward svc/my-group.my-domain.com 5432:5432

Where my-group.my-domain.com is the proxy service name from the previous example.